Anthony Elliott, Algorithms of Anxiety: Fear in the Digital Age (Polity Press, 2024)

Reviewed by James Smithies (Australian National University)

(This is a prepublication version of this review. The published version appears in the journal Thesis Eleven, on the T11 Sage website.)

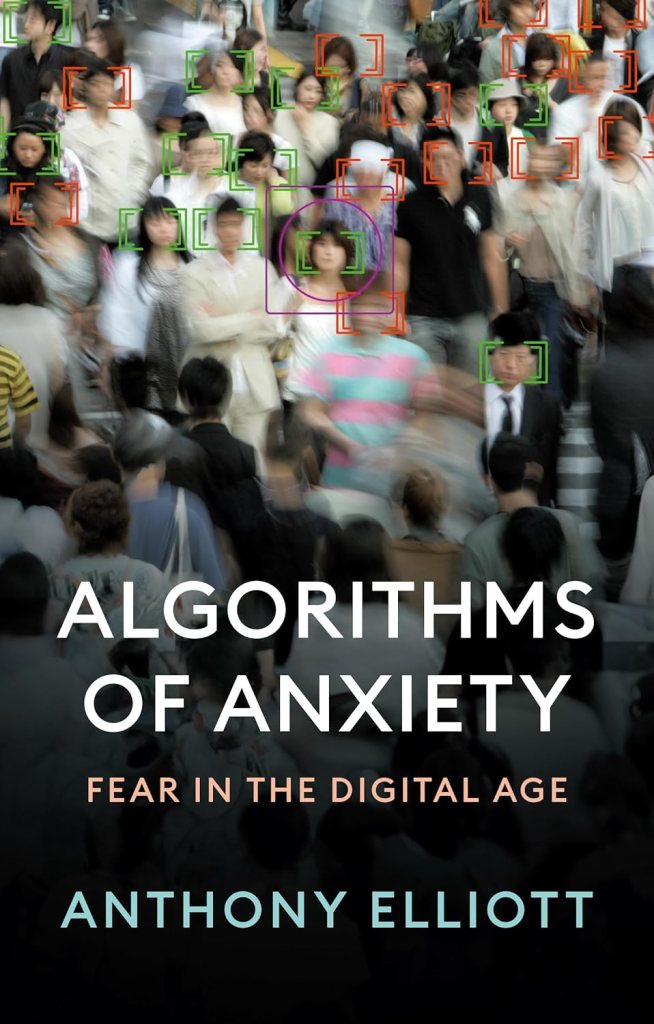

Anthony Elliott’s Algorithms of Anxiety: Fear in the Digital Age is a confronting book that forces readers to reconsider their relationship to digital culture. The power of Elliott’s approach lies in his liberal use of Freudian analysis to demonstrate the connection between algorithms and what Reinhart Koselleck referred to in 1988 as the “pathogenesis” of modern society (2015). This results in a view of contemporary society that binds together technology, capitalism, anxiety, fear, and violence. Elliott’s central claim is that “…fear in the digital age is not just a matter of cognitive distortion or derailed belief systems, but rather goes all the way down into our deepest emotional sensibilities and affective commitments” (158). The concluding chapter is particularly grim, offering a “provisional catalogue of the fears we see erupting in the algorithmic age” (161).

Analysis of algorithmic culture is well established, building on seminal work by writers including Frank Pasquale (2015) and Taina Bucher (2018). Other work (Mackenzie, 2017) deconstructs algorithms from more technical perspectives, acknowledging their centrality to computer science and machine learning. Algorithms of Anxiety makes an important contribution to this wider genre, and it also contributes to the sociology of modernity by defining a new mode of 'algorithmic modernity'. Using Freudian analysis, Elliott positions algorithmic modernity as an extension of twentieth-century mass culture, overshadowed by the looming existential fear of Artificial General Intelligence (AGI). This is a bold approach, aligning algorithmic criticism with traditional socio-historical analysis and making a strong case for the continued analysis of modernity. Like Kyle Chayka's Filterworld (2024), Elliott's book suggests there is still some way to go before we fully appreciate (and perhaps make peace with) algorithms as mediating components of daily life.

Analysis of the Netflix algorithm and an eviscerating chapter on the Netflix show Squid Game extend Elliott's argument towards 'the lethal ecstasy of algorithmic violence'. Interpreting the violence of the show through the Freudian lens of Eros and Thanatos, Elliott suggests that "Squid Game's retrotopian, high-tech take on death games graphically captured contemporary women and men at war with both themselves and with society" (85). Algorithms are thus wired into our individual and collective death drives in a way that Feodor Dostoevsky and Franz Kafka (both referred to in earlier sections of the book) would understand. This is unremittingly powerful stuff, and it is important in the way it continues the received discourse of modernity, drawing on Jürgen Habermas, Pierre Bourdieu, and Jean Baudrillard. Elliott's argument that social theory needs to be upgraded to account for algorithmic culture, with more focus on relational materialism and a more sophisticated understanding of socio-technical experience, is well made. His use of theorists such as Niko Pajkovic suggests how this can be done, but his case could have been strengthened through discussion of writers such as N. Katherine Hayles, whose work on nonconscious cognition (2017) aligns well with Elliott's intuitions.

The negativity of Elliott's mode of algorithmic criticism is problematic, however. It is difficult to unpack why the genre is so negative, but I suspect it often makes a category error, mistaking one component of digital technology for the whole. The result is a sense of powerlessness and a thoroughgoing negativity about contemporary culture. Algorithms are, after all, only one component in a much larger technical infrastructure comprising (variously) software, interfaces, Application Programming Interfaces (APIs), Software Development Kits (SDKs), networks, servers, data warehouses, and microchips. Because algorithms are deployed within this larger infrastructure, it makes more sense to speak of a digital (rather than merely algorithmic) modernity. That larger socio-technical reality is more opaque than its component parts, and it includes the rhetoric of Silicon Valley marketing and billionaires alongside purely technical aspects, but for that very reason it is a better fit for the philosophical discourse of modernity. Shannon Vallor confronts this problem in The AI Mirror (2024), acknowledging the many failings of contemporary digital culture but arguing that "[t]oday's rising chorus of public distrust of technical and scientific expertise leads to reactive fears and resentments of technology itself…" (172). At some point we need to ask what our overall goal is: to penetrate deep into the depressive corners of our contemporary zeitgeist, in the manner of Joseph Conrad in Heart of Darkness (1899) or Francis Ford Coppola in Apocalypse Now (1979), or to find modes of analysis (and applied practice) that can help us bend the arc of technology development in a more positive direction?

Both modes of analysis are, of course, needed. In some ways Elliott's is the braver mode, because in the interests of disinterested enquiry it risks empowering the very "dark enlightenment" (Metz, 2021) it critiques. I wonder, though, whether our moment is too febrile for that kind of analysis. We desperately need to build a sense of agency over technology and to reduce the discourse of fear that Elliott so unremittingly documents. Where are the stories documenting the use of digital media to promote political activism (Gerbaudo, 2012; Tufekci, 2017), the resistance of rural Chinese communities to mobile phone use (Oreglia, 2013), or the use of digital platforms to promote sustainable journalism in Africa (Elega et al., 2024)? There are many positive examples of technology use in the Global South to look to, and it is revealing that negative critiques from the Global North rarely factor them in (Arora, 2019; 2024). This is not to suggest that digital colonialism isn't a major problem, or that the powers of surveillance capitalism can be easily tamed, but the use of digital media is never straightforwardly positive or negative, and opportunities for positive social and political outcomes do still exist. The depressing sense of determinism that algorithmic analysis creates hides opportunities for creativity and growth. As Wendy Chun cogently noted in Discriminating Data (2021), if we view algorithms as historically mediated, human-designed artefacts (historically contingent modes of computational logic), we have a greater chance of improving and regulating them.

Algorithms of Anxiety is an important reflection of the discourse of pathogenesis described by Koselleck in 1988. It places digital culture and its users on the couch and subjects them to analysis in the grand tradition of twentieth-century cultural critique. If this kind of component analysis promotes a sense of powerlessness and highlights the fear and anxiety that continue to stalk modernity, that is not entirely a bad thing. Contemporary algorithmic experience is deeply troubling, and it is having a profound effect on our mental health and democratic processes. Elliott's book confronts us with the Freudian depths of our socio-technical experience, and in doing so offers another wake-up call. Algorithms of Anxiety is not an easy read, but it is an important contribution to the discourse of modernity. We need, though, to get the analytical balance right and avoid repeating the mistakes of the giants of twentieth-century critical theory. Computational rationality can support planetary flourishing if we get the terms of engagement right.

References

Arora, P. (2019). The next billion users: Digital life beyond the West. Cambridge, MA: Harvard University Press.

Arora, P. (2024). From pessimism to promise: Lessons from the Global South on designing inclusive tech. Cambridge, MA: The MIT Press.

Bucher, T. (2018). If… then: Algorithmic power and politics. Oxford: Oxford University Press.

Chayka, K. (2024). Filterworld: How algorithms flattened culture. New York, NY: Doubleday Books.

Chun, W.H.K. (2021). Discriminating data: Correlation, neighborhoods, and the new politics of recognition. Cambridge, MA: The MIT Press.

Elega, A.A., Munoriyarwa, A., Mesquita, L., Gonçalves, I. and de-Lima-Santos, M.-F. (2024). Empowerment through sustainable journalism practices in Africa: A walkthrough of EcoNai+ and Ushahidi digital platforms. Journalism Practice, pp. 1–21.

Gerbaudo, P. (2012). Tweets and the streets: Social media and contemporary activism. Illustrated edn. London: Pluto Press.

Hayles, N.K. (2017). Unthought: The power of the cognitive nonconscious. Chicago: The University of Chicago Press.

Koselleck, R. (2015). Critique and crisis: Enlightenment and the pathogenesis of modern society. Translated by B. Gregg. Originally published in 1988. Cambridge, MA: The MIT Press.

Mackenzie, A. (2017). Machine learners: Archaeology of a data practice. Cambridge, MA: The MIT Press.

Metz, C. (2021). 'Silicon Valley's safe space', The New York Times, 13 February, Technology section. Available at: https://www.nytimes.com/2021/02/13/technology/slate-star-codex-rationalists.html (Accessed: 4 December 2024).

Oreglia, E. (2013). When technology doesn’t fit: Information sharing practices among farmers in rural China. In Proceedings of the sixth international conference on information and communication technologies and development: Full papers – volume 1, ICTD ’13. New York, NY: ACM. pp. 165–176.

Pasquale, F. (2015). The black box society: The secret algorithms that control money and information. Cambridge, MA: Harvard University Press.

Tufekci, Z. (2017). Twitter and tear gas: The power and fragility of networked protest. New Haven, CT: Yale University Press.

Vallor, S. (2024). The AI mirror: How to reclaim our humanity in the age of machine thinking. New York, NY: Oxford University Press.